TCP – TRANSMISSION CONTROL PROTOCOL


TRANSMISSION CONTROL PROTOCOL (TCP)

Fundamentals of TCP Operation

- Connection setup and teardown

- Multiplexing

- Data transfer

- Flow control

- Reliability

- Precedence and security

TCP can be thought of as the Fed-Ex of protocols, which boasts “When it absolutely, positively has to get there overnight!” In other words, it provides reliable, acknowledged delivery of data. TCP delivers far faster than Fed-Ex, of course; however, the overhead of that reliability means TCP remains slower than UDP.

Achieving such a high standard of delivery involves overhead in the form of establishing, maintaining, and terminating sessions between hosts. Unlike connectionless protocols, TCP does not rely on lower layers to track data. TCP does not limit itself by only identifying the sending and receiving host process, placing data on the wire, and hoping it arrives at its destination without any follow-up. TCP uses sequencing and acknowledgments to guarantee the delivery of packets.

Unlike its counterpart UDP, when TCP receives a stream of data (messages) from an application, it breaks the stream into segments and assigns a sequence number to each byte before IP delivers the segments within datagrams. The destination must return corresponding acknowledgments so the sender knows that each segment has arrived. TCP keeps a copy of every unacknowledged segment in a buffer at the host, tracked through the connection's TCB (transmission control block). If it does not receive an acknowledgment, it assumes the datagram has been lost and retransmits the segment. We discuss this in more detail later in this chapter.
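The byte-level numbering described above can be sketched in a few lines of Python. This is an illustrative model only: the `Segment` class, the `segmentize` and `unacknowledged` helpers, the initial sequence number of 1000, and the 5-byte segment size are all assumptions made for the example, and sequence-number wraparound is ignored.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    seq: int     # sequence number of the first byte carried in this segment
    data: bytes


def segmentize(stream: bytes, initial_seq: int, mss: int) -> List[Segment]:
    """Split a byte stream into segments of at most `mss` bytes each."""
    return [
        Segment(seq=initial_seq + offset, data=stream[offset:offset + mss])
        for offset in range(0, len(stream), mss)
    ]


def unacknowledged(segments: List[Segment], ack: int) -> List[Segment]:
    """Return the segments not fully covered by an acknowledgment.

    `ack` is the number of the next byte the receiver expects, so every
    byte numbered below it has arrived.
    """
    return [s for s in segments if s.seq + len(s.data) > ack]


segments = segmentize(b"hello, world", initial_seq=1000, mss=5)
# The receiver has acknowledged everything up to (not including) byte 1005.
pending = unacknowledged(segments, ack=1005)
```

Here `pending` holds the segments the sender would still have to keep in its buffer and, if no acknowledgment arrives, retransmit.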

Connection Setup and Teardown

To provide reliable data delivery between processes, TCP must make a connection before the upper-layer applications can exchange any meaningful data. To accomplish this, TCP first establishes a connection, known as a logical circuit, between ports on the two hosts. This connection links the ports, and therefore the processes, running within each host. TCP maintains this connection throughout the entire conversation and tears it down when it is no longer needed.

Once IP learns the logical address of the destination host, TCP sets up a session that provides the reliable foundation for the upper-layer protocols to deliver data. When the user or one of the hosts requests to close a session, TCP tears the session down. We discuss the session setup and teardown procedures and exchanges in more detail later in this chapter.
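From an application's point of view, setup and teardown are hidden behind the sockets API. The following is a minimal sketch using Python's standard `socket` module on the loopback interface; the address `127.0.0.1`, the OS-chosen port, and the `b"ping"` payload are assumptions chosen for the example.

```python
import socket

# Server side: bind to an OS-chosen free port on the loopback interface.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)

# Client side: create_connection() returns once TCP has set up the session.
client = socket.create_connection(server.getsockname())
conn, client_addr = server.accept()

# Data can flow only after the connection exists.
client.sendall(b"ping")
received = conn.recv(1024)

# Teardown: the client closes its side; the server then reads
# end-of-stream (an empty bytes object) and closes its own side.
client.close()
end_of_stream = conn.recv(1024)
conn.close()
server.close()
```

The application never sees the setup or teardown exchanges themselves; it only observes that `create_connection()` succeeds and that `recv()` eventually returns an empty result.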

Multiplexing

Multiplexing capability enables TCP to establish and maintain multiple communication paths between two hosts simultaneously. Multiplexing also allows a single host to distinguish and maintain sessions with many hosts simultaneously. Hosts need this capability because usually, they run multiple applications or services such as Telnet, FTP (File Transfer Protocol), or other services. TCP has to distinguish one process from another and manage and maintain the communications for these processes.

To accomplish this, TCP uses ports to differentiate and manage communications. There are two main port types: server ports and client ports. Server ports identify major applications or services; for example, Telnet (port 23), SMTP (port 25), and FTP (ports 20 and 21). Client ports vary; they are chosen on the fly and assigned dynamically from the range 1024–65535.
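One way to picture multiplexing is a lookup table keyed by the addresses and ports at both ends of each session. The sketch below is a simplified model, not how any particular operating system implements it; the `Demultiplexer` class, the key layout, and the example addresses are hypothetical.

```python
from typing import Callable, Dict, Tuple

# (local address, local port, remote address, remote port)
ConnectionKey = Tuple[str, int, str, int]


class Demultiplexer:
    """Route incoming payloads to the session they belong to."""

    def __init__(self) -> None:
        self._handlers: Dict[ConnectionKey, Callable[[bytes], None]] = {}

    def register(self, key: ConnectionKey, handler: Callable[[bytes], None]) -> None:
        self._handlers[key] = handler

    def deliver(self, key: ConnectionKey, payload: bytes) -> bool:
        """Hand the payload to the matching session; False if none exists."""
        handler = self._handlers.get(key)
        if handler is None:
            return False
        handler(payload)
        return True


# Two Telnet sessions from the same client host to server port 23,
# told apart only by the client's dynamically chosen port.
telnet_a, telnet_b = [], []
demux = Demultiplexer()
demux.register(("10.0.0.1", 23, "10.0.0.2", 1025), telnet_a.append)
demux.register(("10.0.0.1", 23, "10.0.0.2", 1026), telnet_b.append)

delivered = demux.deliver(("10.0.0.1", 23, "10.0.0.2", 1026), b"ls\r\n")
unknown = demux.deliver(("10.0.0.1", 23, "10.0.0.2", 4000), b"?")
```

Because the client port differs, both sessions can share the same server port without their data being mixed.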

Data Transfer

TCP receives and organizes streams of data (messages) from upper-layer processes or applications as segments and passes them down to IP (Network layer), which formats them as datagrams for addressing, packaging, and delivery. When IP receives datagrams from a remote host, it inspects the protocol field within the IP header to determine whether to hand the contents to TCP or UDP for processing.

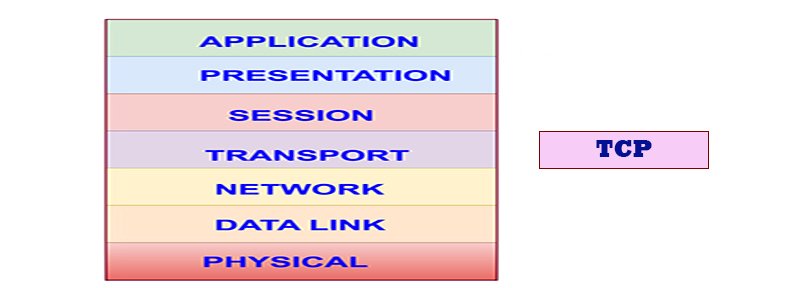

TCP runs on top of the Internet Protocol (IP), which provides Network layer addressing and connectionless delivery of datagrams between hosts. The protocol type value 6 identifies TCP within the IP header. The protocol type value 17 identifies UDP within the IP header.
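The protocol values can be checked against a raw IPv4 header, where the protocol field is the tenth byte (offset 9). The sketch below builds a sample 20-byte header with `struct`; the helper names `make_header` and `ip_protocol_name` and the addresses are assumptions for illustration, and the header checksum is left at zero as a placeholder.

```python
import socket
import struct


def make_header(protocol: int) -> bytes:
    """Build a minimal 20-byte IPv4 header (checksum left as zero)."""
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45,        # version 4, header length 5 words
        0,           # type of service
        20,          # total length
        0,           # identification
        0,           # flags and fragment offset
        64,          # time to live
        protocol,    # protocol field: which transport gets the payload
        0,           # header checksum (placeholder)
        socket.inet_aton("10.0.0.1"),
        socket.inet_aton("10.0.0.2"),
    )


def ip_protocol_name(ipv4_header: bytes) -> str:
    """Report which transport protocol the datagram should be handed to."""
    protocol = ipv4_header[9]
    if protocol == socket.IPPROTO_TCP:   # 6
        return "TCP"
    if protocol == socket.IPPROTO_UDP:   # 17
        return "UDP"
    return f"other ({protocol})"


tcp_name = ip_protocol_name(make_header(6))
udp_name = ip_protocol_name(make_header(17))
```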

When TCP receives segments within datagrams from IP, it reassembles them into organized data streams (messages), identifies the receiving client or server port, and passes them on to the appropriate upper-layer application for processing. The upper (application) and lower (Internet Protocol) layers have a bi-directional relationship with TCP, depending on the direction of the data flow. TCP provides the same fundamental services to all upper-layer protocols. This is a simplistic view of how TCP operates; we will discuss TCP operation in more detail later in this chapter.

Flow Control

TCP needs a method of controlling the inbound flow of data. Flow control guarantees that incoming traffic does not overwhelm a host’s receive buffer and that the receiving host can adequately process and respond to the sending host’s requests. The window mechanism identified within the TCP header provides this function. We will take a detailed look at flow control and the TCP header later in this chapter.

Each end host maintains its own window and advertises this window to the other side. When a host becomes congested, that is, when its receive buffer begins to fill, it reduces its window size and advertises the smaller value to the other side. In effect, the host asks the other side to slow down its transmissions.

When the congestion clears, the host can increase the window size, alerting the other side that it can send more data. Because the window advances through the byte stream as data is acknowledged and can grow or shrink as needed, this mechanism is referred to as a sliding window. An administrator can configure the initial window size at the host. This configuration varies depending on the operating system used.
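The effect of an advertised window on a sender can be modelled with a small simulation. This is a sketch under simplifying assumptions: the `WindowedSender` class and its method names are hypothetical, window sizes are counted in bytes, and loss and retransmission are ignored.

```python
class WindowedSender:
    """Send no more unacknowledged data than the receiver's window allows."""

    def __init__(self, data: bytes, window: int) -> None:
        self.data = data
        self.window = window     # most recent window advertised by the receiver
        self.acked = 0           # number of bytes acknowledged so far
        self.next_to_send = 0    # offset of the next byte to transmit

    def sendable(self) -> bytes:
        """Return (and mark as sent) the bytes the window currently permits."""
        limit = min(self.acked + self.window, len(self.data))
        chunk = self.data[self.next_to_send:limit]
        self.next_to_send = max(self.next_to_send, limit)
        return chunk

    def on_ack(self, acked: int, window: int) -> None:
        """Record an acknowledgment and the window advertised with it."""
        self.acked = acked
        self.window = window


sender = WindowedSender(b"abcdefghij", window=4)
first = sender.sendable()          # window of 4: four bytes go out
stalled = sender.sendable()        # window is full, nothing more may be sent
sender.on_ack(acked=4, window=2)   # receiver is busy and shrinks its window
second = sender.sendable()
sender.on_ack(acked=6, window=4)   # receiver has recovered and reopens it
third = sender.sendable()
```

Each acknowledgment moves the left edge of the window forward, while the advertised size decides how far ahead of that edge the sender may go.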

Reliability

Reliability comes from TCP’s guaranteed delivery of data. TCP numbers each byte it sends and requires the other side to acknowledge the bytes it has received; an acknowledgment names the next byte expected and so covers every byte before it. This enables a host to detect whether information has been lost or has arrived out of order.

The receiving host does not send an ACK for data that is lost in transit. The transmitting (sending) host has the task of detecting lost or missing segments and retransmitting them if necessary. If the sending host does not receive an acknowledgment within a specified period of time, it retrieves a copy of the previously sent information from its retransmission buffer and sends the lost data again. The sending host uses a timer based on a round-trip delay calculation to decide when a segment should be considered lost: if the timer expires before sent data is acknowledged, the sending host assumes the datagram is lost and retransmits.
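A round-trip-based timer of this kind can be sketched as follows. The smoothing constants, the one-second floor, and the doubling on expiry follow the scheme published in RFC 6298, which is an assumption about what a given TCP stack actually does; the class and method names are hypothetical, and clock granularity is ignored.

```python
class RtoEstimator:
    """Estimate a retransmission timeout (RTO) from round-trip samples."""

    ALPHA = 1 / 8    # weight of a new sample in the smoothed RTT
    BETA = 1 / 4     # weight of a new sample in the RTT variation
    MIN_RTO = 1.0    # seconds
    MAX_RTO = 60.0   # seconds (assumed upper bound)

    def __init__(self) -> None:
        self.srtt = None      # smoothed round-trip time
        self.rttvar = None    # round-trip time variation
        self.rto = 1.0        # initial timeout before any measurement

    def on_measurement(self, rtt: float) -> float:
        """Update the estimate with a newly measured round-trip time."""
        if self.srtt is None:
            self.srtt = rtt
            self.rttvar = rtt / 2
        else:
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt
        self.rto = min(self.MAX_RTO, max(self.MIN_RTO, self.srtt + 4 * self.rttvar))
        return self.rto

    def on_timeout(self) -> float:
        """Back off after the timer expires without an acknowledgment."""
        self.rto = min(self.MAX_RTO, self.rto * 2)
        return self.rto


estimator = RtoEstimator()
rto_first = estimator.on_measurement(0.5)
rto_second = estimator.on_measurement(0.5)
rto_after_loss = estimator.on_timeout()
```

With steady samples the timeout shrinks toward the measured round-trip time, and each expiry lengthens it so that a slow path is not flooded with retransmissions.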

TCP deals with damaged segments through a 16-bit checksum field contained within the TCP header. The sending host calculates the checksum before transmitting. The receiving host recalculates it upon receipt to determine whether a segment has been damaged while in transit.

If the receiving host detects a damaged segment, it simply discards the segment without notifying the source host. Eventually, the source host realizes something has happened to this segment because it has not received a corresponding acknowledgment from the receiving host. At this point the TCP timer expires, causing the source host to retransmit the data.
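The integrity check carried in the TCP header is a 16-bit one's-complement checksum. The sketch below shows the arithmetic only; the real calculation also covers the TCP header and a pseudo-header of IP addresses, which are omitted here, and the function name and sample payload are assumptions.

```python
def internet_checksum(data: bytes) -> int:
    """Return the 16-bit one's-complement checksum of `data`."""
    if len(data) % 2:
        data += b"\x00"                      # pad to a whole number of 16-bit words
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:                       # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


payload = b"TCP segment!"                    # even length keeps the example simple
checksum = internet_checksum(payload)

# Receiver side: summing the data together with the transmitted checksum
# yields zero when nothing was damaged in transit.
intact = internet_checksum(payload + checksum.to_bytes(2, "big")) == 0

# Flip one bit to simulate damage; the check no longer comes out to zero.
corrupted = bytes([payload[0] ^ 0x01]) + payload[1:]
damaged = internet_checksum(corrupted + checksum.to_bytes(2, "big")) != 0
```

A receiver that sees a non-zero result drops the segment silently and leaves recovery to the sender's retransmission timer.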

Precedence and Security

The DoD mandates that all protocols implemented within its networks support a multilevel security model and precedence levels. TCP can utilize the service and security options within IP to provide this level of service to upper-layer applications. TCP offers these types of services to upper-layer applications:

- Precedence

- Delay

- Throughput

- Reliability

The options within the Type of Service field of the IP header indicate how a datagram should be handled. When implemented, the Type of Service options (precedence, delay, throughput, and reliability) influence route selection when delivering datagrams. For example, if an application requires a datagram to be sent by a fast path when there is more than one path to a destination, it can request that routers send the datagram over the path offering the lowest delay.

The first option, known as precedence, indicates whether this datagram carries routine or high priority (precedence) information; the higher the precedence level, the higher the security level. A host with a mismatched or lower precedence (security level) cannot establish a connection to a process on another host that has a higher security level. In this way, a host can reject a connection request on the basis of multilevel security.

Although the IP header contains the Type of Service options, TCP can make use of these functions on a per-connection basis through bi-directional communication. TCP can store these options in its TCP memory buffer to provide increased security and more efficient delivery of application data.
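The four Type of Service options can be read out of the single ToS byte of the IP header. The sketch below assumes the original layout from RFC 791 (three precedence bits followed by one bit each for delay, throughput, and reliability); later standards redefine this byte, so treat the layout as an assumption. The `decode_tos` helper and the sample value are hypothetical.

```python
from typing import NamedTuple


class TypeOfService(NamedTuple):
    precedence: int          # 0 (routine) through 7 (highest)
    low_delay: bool
    high_throughput: bool
    high_reliability: bool


def decode_tos(tos: int) -> TypeOfService:
    """Split a Type of Service byte into its four options."""
    return TypeOfService(
        precedence=(tos >> 5) & 0b111,
        low_delay=bool((tos >> 4) & 1),
        high_throughput=bool((tos >> 3) & 1),
        high_reliability=bool((tos >> 2) & 1),
    )


# 0b101_1_0_0_00: precedence 5 with the low-delay bit set.
options = decode_tos(0b10110000)
routine = decode_tos(0)
```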

Connection-oriented Characteristics

As we know, protocols fall into one of two categories: connection-oriented or connectionless. As a connection-oriented protocol, TCP implements all six of these basic characteristics:

- Session setup

- Teardown

- Sequencing

- Acknowledgements

- Keepalives

- Flow control

Session Setup

Socket Pairing

Session Teardown

Sequencing and Acknowledgements

Retransmission

Timers

Keepalives

Congestion

Flow Control

TCP Ports

TCP uses well-known ports to establish a client-server relationship. TCP uses these ports at the Transport layer to identify which upper-layer processes have sent or should receive data streams.

| Decimal | Keyword | Protocol(s) | Description |
|---|---|---|---|
| 20 | FTP-Data | TCP | File Transfer Protocol (Data) |
| 21 | FTP | TCP | File Transfer Protocol (Control) |
| 23 | Telnet | TCP | Telnet |
| 25 | SMTP | TCP | Simple Mail Transfer Protocol |
| 49 | LOGIN | TCP | Login Host Protocol |
| 53 | DNS | TCP/UDP | Domain Name Service |
| 63 | VIA-FTP | TCP | VIA-Systems-FTP |
| 70 | Gopher | TCP | Gopher File Service |
| 80 | WWW | TCP | World Wide Web Services |
